The Beauty And Art Of Rust Service Infrastructure

Hello my lovelies, remember that time almost a year ago when I decided to run this website off a small CDN I made from scratch using a bunch of snakes, glue, and a little rust?

Yeah so quick update on that. And I guess it should not be a surprise to you, but I have some news about how it's held up so far…

There have been… absolutely NO ISSUES.

I'm completely serious, I have not had to touch it ONCE since I set it up.

WHAT THE FUCK. AM- AM I A GOOD PROGRAMMER??

I know my number one piece of advice to people is to just "not write bugs" and "do it right the first time." But that's just me fucking around. I didn't know it was even possible to do this?!?!

It fucking WORKS.

So with this great success, you might wonder why I've decided to completely scrap it and rewrite everything entirely into a single Rust project. But honestly, you should really know better by now.

It's Rust.

C'mon mate. It's RUST. Me and Ferris the Crab are BROS. You gotta support your bros!!

Ok there are a few other reasons.

- I wanted to switch hosting providers and realised that setting everything up again would be super annoying. I want to just plop a single binary into a server and have it work.

- I want to make more tools. WE NEED MORE TOOLS. Yes I'm not over that. I still don't understand what the fuck they were talking about.

- The DNS server (written in Rust) was the only component of that mess I was somewhat happy with. So I think it would be a good idea to move it all to Rust and be happy with everything.

- I have recently become filled with an intense confidence that this wouldn't be very hard.

And with that all said, I present to you: b8s, my container orchestration system/content delivery network/load balancing cluster. (1)

(1) I have learnt more buzzwords.

I'll get into what it does and how it all works in a second but first, I want to tell you a story of how one of the more important aspects of the system was created.

So I was catching up with one of my old co-workers, and I was telling him about this plan to make my own Kubernetes (in Rust) for a single website that nobody reads. (2)

(2) Ok, I keep saying that nobody reads this blog. But the truth is that I actually have no idea. There's no analytics and I'm not interested in parsing nginx logs. The only indication I get is that I very occasionally get "fan mail." But I don't know what the "fan mail" to reader ratio normally looks like, so I still can't tell.

I'm going to keep saying nobody reads this blog because I think it's funnier.

And I was going through the list of things I needed to implement.

- A DNS server with geolocation routing.

- An Automatic Certificate Management Environment (ACME) implementation for managing SSL certificates.

- A scheduling environment to manage and update the above two.

"What about an overlay network?" he asked.

"That seems like a lot of effort. The idea is that everything is done through b8s, and managing wg-quick through code kinda goes against that. The only way to do that would be to embed WireGuard into it, and I feel that wouldn't be very easy."

"It shouldn't be. WireGuard isn't that complicated, I'm sure you can figure it out."

"Ugh fine, I'll look into it."

Normally I would ignore anyone's suggestion about programming because I very clearly know better than everyone else, especially when it's about one of my personal projects. But this dude is probably the best engineer I know, so I felt like I had to at least suss it out.

And so as it turns out, yeah he was completely right. WireGuard? Real fucking easy to add. Got that done in two days.

All I did was embed Cloudflare's userspace Rust implementation of WireGuard called "BoringTun" (that I forked to make a few fixes) into b8s, and implement the routing by going through the docs: "Routing & Network Namespace Integration."

It was honestly insanely simple, like holy shit I could probably make my own Tailscale at this point.

I took a little dive into the WireGuard protocol as well for the first time, and apart from all the cryptography I just glazed my eyes over, it was surprisingly understandable. Way better than when I looked at OpenVPN. I never knew or appreciated how well designed WireGuard was before, I think I've just become a WireGuard fanenby for life.

No, I'm not going to explain any of it to you.

:)

Anyway, that was the story about how making your own tools can help you appreciate new things. Did you like it? Should I do this more often? Email your thoughts to /dev/null.

So b8s is split into two services/binaries, a dom and sub. (3)

(3) Yes I know, I am very funny.

The dom is the control plane: it's the source of truth for DNS records and handles SSL and WireGuard management. This could run off my laptop, but since I'm currently on macOS, it runs off one of my Linux servers at home. I required there to be no inbound connections to the dom, so this is pretty safe (or at least safe enough for me).

subs are where the actual blog is located. These are currently spread across three nodes in Australia, the UK, and USA respectively. They also run the DNS nameservers in a similar setup to before.

Since having b8s be easy to set up was one of my most important priorities, let's go through the process of adding a new sub to the network. First, from the control plane, I build the sub binary and create a link to download it. There's a script called send-sub to do this.

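    #!/bin/bash
    set -e

    # Build the release binary for the sub.
    cargo build --bin sub --release

    # Upload it to temporary file storage and keep the download link.
    URL=$(curl -sF "file=@target/release/sub" https://file.io | jq '.link')

    # Print the commands to run on a new server (Init) or an existing one (Update).
    printf "Init:\ncurl https://git.sr.ht/~beanpup_py/b8s/blob/main/scripts/init-sub | BIN_URL=$URL bash"
    printf "\n\nUpdate:\ncurl https://git.sr.ht/~beanpup_py/b8s/blob/main/scripts/update-sub | BIN_URL=$URL bash\n\n"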

I don't have a place to store binaries so I build them on the fly as needed and send them to a temporary file storage service. This script tells you to initialise a new sub with this command, where $URL is the URL from the file storage service.

curl https://git.sr.ht/~beanpup_py/b8s/blob/main/scripts/init-sub | BIN_URL=$URL bash

This command is run on a completely new server. (4)

(4) Yes I'm piping things directly into bash. It's my script, I know what's in it.

It does an apt update, creates a new user to run the sub under, sets up the firewall (ufw), downloads the sub binary, and runs it as a systemd service. To connect to the dom, the sub on its first run will tell you to run this command:

dom add-sub <IPV4_ADDRESS>:<IPV6_ADDRESS>:<AUTH_TOKEN>:<WIREGUARD_PUBLIC_KEY> -- <COORDINATES> <NAME>

All of those variables except NAME are provided, and they should be self-explanatory, but let's go through them anyway.

- IPV4_ADDRESS: The IPv4 address of the sub.

- IPV6_ADDRESS: The IPv6 address of the sub.

- AUTH_TOKEN: Since this happens before the WireGuard connection exists, we need an initial token sent back from the dom to prove its legitimacy and authenticate itself.

- WIREGUARD_PUBLIC_KEY: The sub's public key for WireGuard.

- COORDINATES: The geographic coordinates (latitude and longitude) of the sub, used for geolocation. More on this later.
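One wrinkle with a colon-separated argument like this is that IPv6 addresses are full of colons themselves. Here's a rough sketch of one way to pull it apart anyway, assuming the token and key never contain a colon (base64 doesn't). The `SubSpec` type and `parse_sub_spec` function are made up for illustration and are not b8s's actual code.

```rust
use std::net::{Ipv4Addr, Ipv6Addr};

/// Hypothetical record for one sub; the field names are illustrative only.
#[derive(Debug, PartialEq)]
struct SubSpec {
    ipv4: Ipv4Addr,
    ipv6: Ipv6Addr,
    auth_token: String,
    wireguard_public_key: String,
}

/// Parses `<IPV4_ADDRESS>:<IPV6_ADDRESS>:<AUTH_TOKEN>:<WIREGUARD_PUBLIC_KEY>`.
/// The IPv4 address is peeled off the front, the key and token off the back,
/// and whatever is left in the middle is treated as the IPv6 address.
/// Assumes the token and key contain no ':' characters.
fn parse_sub_spec(s: &str) -> Result<SubSpec, String> {
    let (ipv4, rest) = s.split_once(':').ok_or("missing IPv6 address")?;
    let (rest, key) = rest.rsplit_once(':').ok_or("missing WireGuard public key")?;
    let (ipv6, token) = rest.rsplit_once(':').ok_or("missing auth token")?;
    Ok(SubSpec {
        ipv4: ipv4
            .parse::<Ipv4Addr>()
            .map_err(|e| format!("bad IPv4 address: {}", e))?,
        ipv6: ipv6
            .parse::<Ipv6Addr>()
            .map_err(|e| format!("bad IPv6 address: {}", e))?,
        auth_token: token.to_string(),
        wireguard_public_key: key.to_string(),
    })
}

fn main() {
    // Documentation-range addresses and placeholder secrets, not real values.
    let arg = "203.0.113.7:2001:db8::1:tok123:pubkeyAAAA=";
    match parse_sub_spec(arg) {
        Ok(spec) => println!("{:?}", spec),
        Err(e) => eprintln!("error: {}", e),
    }
}
```

Splitting from both ends means the IPv6 address never has to be bracketed or escaped, at the cost of banning colons in the last two fields.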

NAME is an identifier for the sub, given when we run the command on the dom. Once that command is run, the dom sends a gRPC request to the sub and gives it the dom's own WireGuard public key, plus the current DNS records if the sub is also acting as a nameserver.

The sub restarts itself once the gRPC request is received and starts the DNS server (if applicable), the WireGuard connection, and pulls the latest blog image from the Docker registry.

There are a few more commands we run with dom (from here on out, all commands are from dom, we're done with sub).

- dom geolite: Downloads the latest GeoLite database file and distributes it to all subs that are nameservers.

- dom ssl: Generates new SSL certificates for the website and each nameserver, and distributes them to all subs.

So that's 5 commands at most to set up a new node. It should probably be 3 (or even 2 if I start storing binaries), since the final two could just be done automatically after add-sub, but I've been double-checking everything after each step so far during testing and haven't gotten around to changing that.

Functionally, it's basically the same as it was before. The biggest difference would be the changes to DNS geolocation. Previously with racine it would change the DNS record based on your continent as described by a yaml file like this.

    - name: blog.waifu.church
      type: A
      value: 95.216.184.160
      geo:
        - continent: OC
          value: 207.148.80.96
        - continent: AS
          value: 207.148.80.96
        - continent: NA
          value: 144.202.92.225
        - continent: SA
          value: 144.202.92.225

This was easy because of how the GeoLite database is structured, but wasn't the best solution because of how broad it was as well as just being really annoying to manually specify values for every continent.
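For the curious, the continent matching boils down to a table lookup with a fallback. This is only a sketch of the idea, not racine's actual code; `GeoRecord`, `resolve`, and `example_record` are names I made up, and the values are copied from the yaml above.

```rust
use std::collections::HashMap;
use std::net::Ipv4Addr;

/// Hypothetical in-memory form of one yaml record:
/// a default value plus per-continent overrides.
struct GeoRecord {
    default: Ipv4Addr,
    by_continent: HashMap<&'static str, Ipv4Addr>,
}

impl GeoRecord {
    /// Returns the override for `continent` if there is one, else the default.
    fn resolve(&self, continent: &str) -> Ipv4Addr {
        self.by_continent
            .get(continent)
            .copied()
            .unwrap_or(self.default)
    }
}

/// Builds the record from the yaml example by hand (no yaml parsing here).
fn example_record() -> GeoRecord {
    let au = Ipv4Addr::new(207, 148, 80, 96);
    let us = Ipv4Addr::new(144, 202, 92, 225);
    GeoRecord {
        default: Ipv4Addr::new(95, 216, 184, 160),
        by_continent: HashMap::from([("OC", au), ("AS", au), ("NA", us), ("SA", us)]),
    }
}

fn main() {
    let record = example_record();
    // Continents without an entry (EU, AF, ...) fall through to the default.
    for continent in ["OC", "NA", "EU"] {
        println!("{} -> {}", continent, record.resolve(continent));
    }
}
```

You can see the problem: every continent needs its own line, and everyone on that continent gets the same answer no matter where they actually are.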

b8s is using a much cleaner solution of finding the closest node to the user from their coordinates. That's why dom needs to know the coordinates of every sub. When choosing what value to give to each A or AAAA record, the sub handling the DNS request will find the nearest sub to the user using the haversine formula (each sub is aware of the coordinates and IP address of every other sub, but can't communicate due to firewall rules).
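The nearest-node pick can be sketched in a few lines. This is my own minimal version of the haversine approach, not the code in b8s: the `Sub` struct, the function names, and the city coordinates are all assumptions for the example, and it uses a mean Earth radius of 6371 km.

```rust
/// Hypothetical view of a sub as a nameserver might store it.
struct Sub {
    name: &'static str,
    lat: f64, // degrees
    lon: f64, // degrees
}

const EARTH_RADIUS_KM: f64 = 6371.0;

/// Great-circle distance in kilometres between two points given in degrees.
fn haversine_km(lat1: f64, lon1: f64, lat2: f64, lon2: f64) -> f64 {
    let (phi1, phi2) = (lat1.to_radians(), lat2.to_radians());
    let dphi = (lat2 - lat1).to_radians();
    let dlambda = (lon2 - lon1).to_radians();
    let a = (dphi / 2.0).sin().powi(2)
        + phi1.cos() * phi2.cos() * (dlambda / 2.0).sin().powi(2);
    // Clamp to 1.0 so rounding error can't push asin out of its domain.
    2.0 * EARTH_RADIUS_KM * a.sqrt().min(1.0).asin()
}

/// Returns the sub closest to the given coordinates, or None if there are no subs.
fn nearest(subs: &[Sub], lat: f64, lon: f64) -> Option<&Sub> {
    subs.iter().min_by(|a, b| {
        haversine_km(lat, lon, a.lat, a.lon)
            .total_cmp(&haversine_km(lat, lon, b.lat, b.lon))
    })
}

/// Illustrative node locations (Sydney, London, New York), not the real ones.
fn example_subs() -> Vec<Sub> {
    vec![
        Sub { name: "au", lat: -33.87, lon: 151.21 },
        Sub { name: "uk", lat: 51.51, lon: -0.13 },
        Sub { name: "us", lat: 40.71, lon: -74.01 },
    ]
}

fn main() {
    let subs = example_subs();
    // Pretend GeoLite placed the resolver somewhere around Tokyo.
    if let Some(sub) = nearest(&subs, 35.68, 139.69) {
        println!("nearest sub: {}", sub.name);
    }
}
```

A linear scan over every sub is fine here since there are only a handful of nodes; the record's value is then just the winning sub's IP address.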

The coordinates that come from the GeoLite database are actually wildly inaccurate (compared to other IP geolocation services I'm aware of) and are several kilometres off in most cases, but this isn't an issue for us since I'm not going to have nodes that close to each other anyway.

The second biggest change is that I've changed the secondary domain name from blog.waifu.church to quaso.engineering. I needed another domain name to test this with while keeping the existing infrastructure alive and I don't feel like changing it back.

Also I've become obsessed with saying "quaso" at every opportunity now. I don't know why, probably has to do with the fact that it's a funny word, and the meme lightly insults Fr*nch people 🤮.

Do you have any ideas on what to do with waifu.church now? Send your ideas to /dev/null and go into the running to win a free, slightly used 5GB iPod Nano or bag of milk (almost expired). (5)

(5) I decide whether you get the iPod or bag of milk.

There's a few things I would still like to add - better monitoring is a big one, there's actual load balancing and failovers too, finishing adding tests would be good as well. But I can't really be bothered to do any of that, maybe next year.

Epilogue

Do you know how bad my urge to rewrite this entire website in Rust is? It's honestly unbearable.

But a website is like the one place I don't think Rust is that good for, I've tried it plenty of times and have never been particularly impressed.

But… my bro. How could I let my bro down like this!??

I dunno what to do… ;(

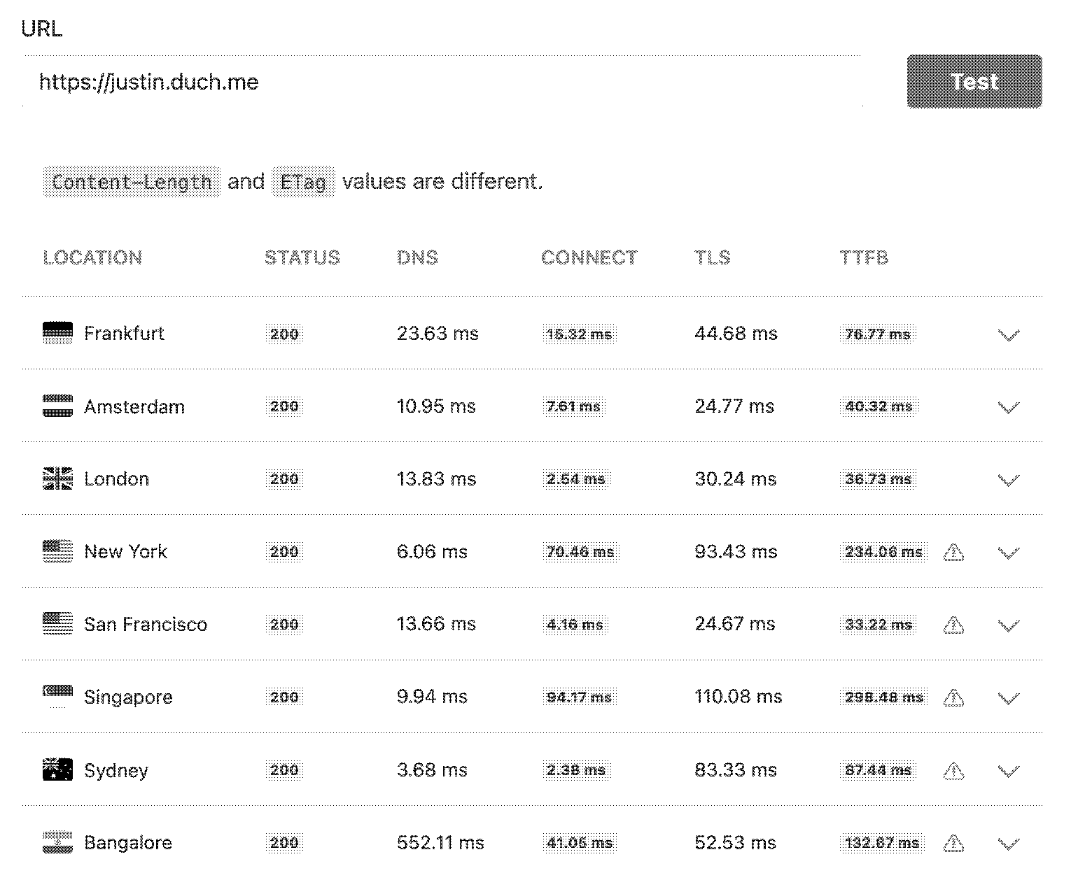

Update 2024-07-19: An additional sub has been added in Singapore for better performance in Asia. I've also found out about KeyCDN's performance test which hits your website from 8 different locations around the world. Here are my results.

Looks okay. I don't know why Singapore has a Time to First Byte (TTFB) over 300ms when there is literally a node right there. I know that node is working since the Bangalore test is going there (it was at over 400ms before the new sub was added). So uhh, like it's still a good enough response time, so it's probably fine to ignore?? I don't wanna do any more work ;( and it's probably a DNS cache thing anyway.

I'm also ignoring the warning about Content-Length and ETag values being different. The Content-Length is only one byte less, so it's probably okay to ignore????

And I have no idea about wtf an ETag is and I don't care to find out. If I've never heard of it in all my years of doing this shit until now, it probably isn't important. And that's my genuine opinion.

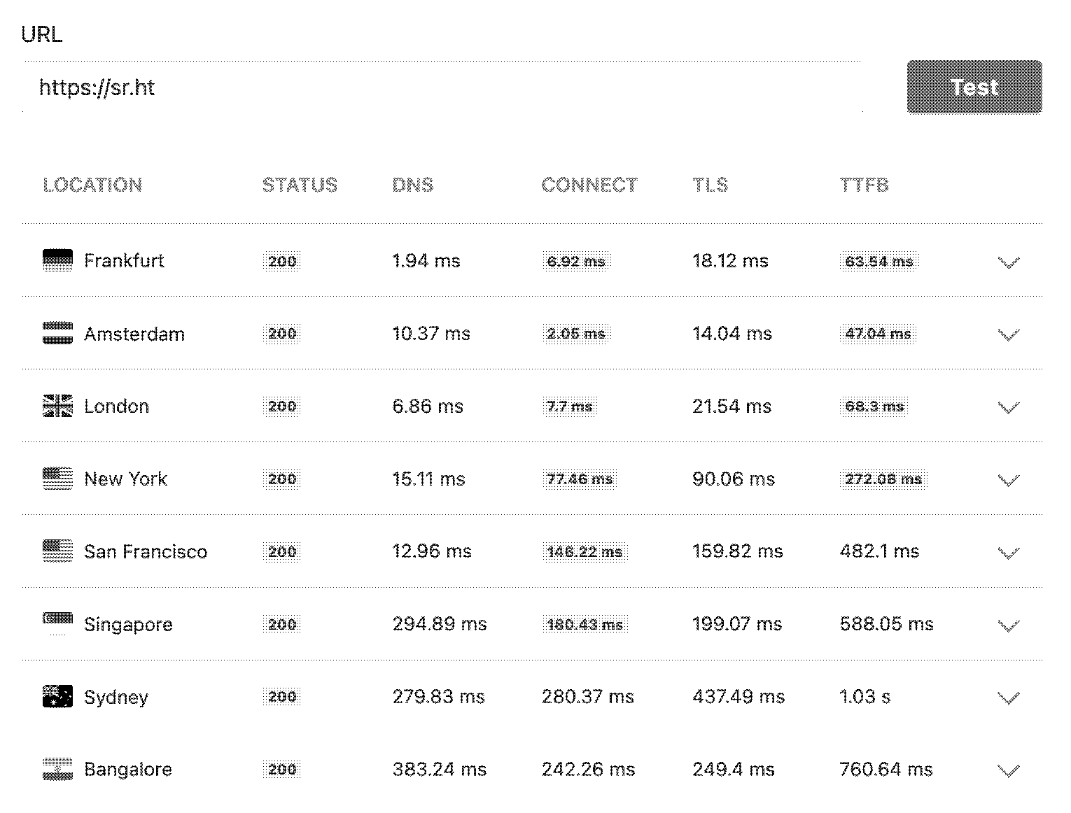

For a reference point, these are the results for my Git hosting provider, sourcehut. I believe they are hosted on a single server in the Netherlands.

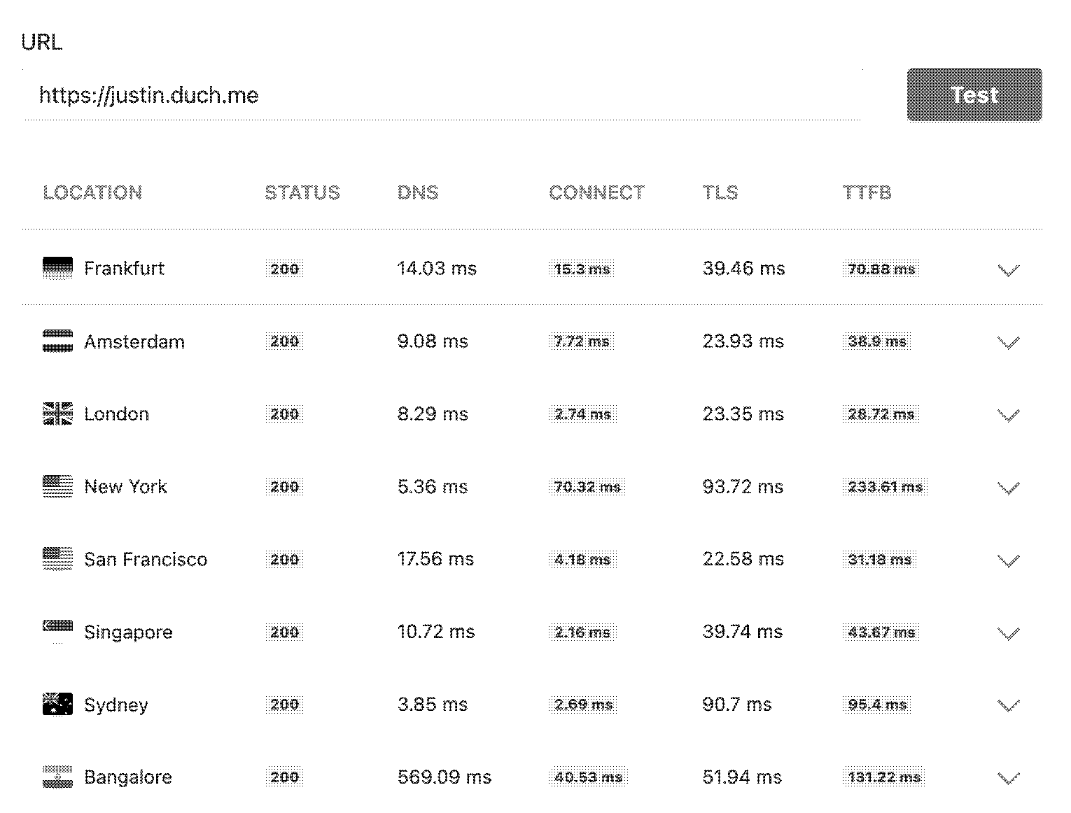

Update a few hours later: Okie dokie, so apparently I can't read for shit. The Content-Length difference between nodes was not only "one" byte, but actually a couple hundred. That is a little more concerning.

So I did some digging and as it turns out, the EU and NA nodes were running out of space which meant the Docker image for the blog was not able to be pulled. (6)

(6) How was I supposed to know 5 GB wasn't enough to run this blog off???

That is now fixed, so here is what the performance test looks like frfr.

Looks like the Singapore DNS cache thing is resolved. The ETag warning has also been fixed because it is a value derived from Content-Length.
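To make the "derived from Content-Length" bit concrete: assuming the blog is served as static files by stock nginx (an assumption on my part, the serving setup isn't described here), the ETag is the file's last-modified time and its length, both in hex, joined with a dash. A small sketch, with a made-up function name and made-up timestamps:

```rust
/// Builds an ETag in the style nginx uses for static files:
/// "<last-modified unix seconds in hex>-<content length in hex>".
fn nginx_style_etag(last_modified_unix: u64, content_length: u64) -> String {
    format!("\"{:x}-{:x}\"", last_modified_unix, content_length)
}

fn main() {
    // Same file timestamp on two nodes, but one node has a stale, shorter page.
    let fresh = nginx_style_etag(0x669a_0000, 5120);
    let stale = nginx_style_etag(0x669a_0000, 4900);
    println!("{} vs {}", fresh, stale);
}
```

So if two nodes serve different versions of a page, both the Content-Length and the ETag disagree, and fixing one fixes the other.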

Yes, I did look up what an ETag was. I'm sorry I lied to you. I thought it would make you think I'm so cool that I wasn't going to be haunted by the fact that I didn't know what an ETag was for the rest of my days. When in reality if I didn't look it up, my mind would have been constantly intruded upon by a little hummingbird pecking at my bean in a way that asks "BUT WHAT THE FUCK IS AN ETAG THO???"

Which I know you think is very uncool ;(